Making Music Interactive: Elaboration of the Feature Set in Wwise Part 2

Article by Louis-Xavier Buffoni

This is the second part in the series. If you have not read the first part, start there.

The Music Engine

We needed to design a set of objects (similar to Sounds and Containers) that would allow us to control the way music responds to events, state changes and RTPCs. The objects of the Interactive Music system will henceforth be called Music Objects. We tried to integrate these Music Objects as closely into Wwise's paradigm as we could, so that they take advantage of the features already available in the Actor-Mixer hierarchy with no extra effort.

Our goal was to provide tools for creating interactive music designs that are out of this world. But as we mentioned at the beginning of this article, the definition of interactive music is broad, vague and eclectic. We know from experience that a feature set as large as the problem it tries to solve, one that addresses every little issue separately and independently without a unifying thread, ends up counterintuitive, unusable, costly to implement, and ultimately a disaster. So we focused on being simple, efficient, and generic, while addressing as many issues as we could.

The feature set was inspired by requests and reports from interactive musicians. We tried to merge them all into one consistent and fairly simple feature set. Needless to say, this process was highly iterative. This article presents the motivation behind most of its components as straightforwardly as possible. Classifying interactive music designs into two distinct approaches was a good start, so let's start from there.

Elements of the Layering Approach

Description

As explained previously, the layering technique consists of having many tracks that play together in sync, with independent control over each of them. Track control involves muting, changing volume, and adjusting filtering or other audio effect parameters, such as the gain of a distortion effect. These properties can be changed through continuous or discrete control.

Discrete control would be done through States: when a state becomes active, the track properties change to the values bound to that state. The problem is that the game state can change at any moment, whereas track properties should change only when it is musically appropriate to do so, for example at the end of a bar or at the end of the chorus. We need to be able to set rules that define when property changes are allowed to take effect.

Music Track

Let's begin by defining the Music Track. Music tracks are placeholders for audio clips, similar to the audio tracks found in any standard sequencer. They are full-fledged Wwise objects, and thus inherit all the basic properties: individual control over volume and low-pass filter through event actions or RTPCs, insert effects, bus routing, state group registration, and so on.

Music Segment

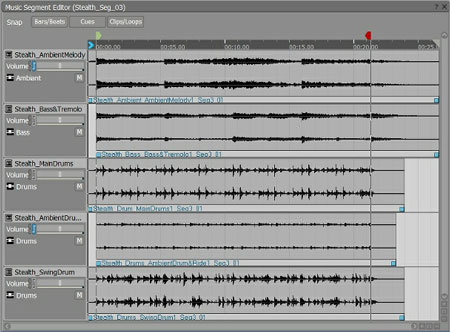

Next, we needed a container for these tracks, an object that would play them all in sync: the Segment. We then designed a sequencer-like graphical user interface for editing segments: creating tracks and laying down audio clips (see screenshot below).

To make our Segment interactive, we use game syncs to control individual track properties at run time. Everything needed for continuous control is already in place: the game monitors some aspect of gameplay and sends its value to the music engine through an RTPC. Depending on the music design, this value can be mapped to any property: the volume or low-pass filter of a track, the parameter of an insert effect on another track (such as the amount of distortion), and so on. Any number of properties can be bound to the same game parameter through RTPCs, each with its own curve. For example, a single game parameter called "life" could simultaneously control the cutoff frequency of a low-pass filter on a drum track (more filtering when "life" is higher), the volume of a separate ride cymbal track (louder when "life" is lower), and the presence of a menacing cello track, which comes in when "life" drops below a certain value.
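The one-to-many mapping in the "life" example can be sketched in Python. This is not the Wwise API: `RtpcCurve`, `music_properties`, the 0 to 100 range of "life", the property names and every curve point are hypothetical values chosen for illustration, and each curve is assumed to be plain piecewise-linear interpolation, clamped at both ends.

```python
from bisect import bisect_right


class RtpcCurve:
    """Hypothetical RTPC curve: piecewise-linear, clamped outside its points."""

    def __init__(self, points):
        # points: (game_parameter_value, property_value) pairs
        self.points = sorted(points)
        self.xs = [x for x, _ in self.points]

    def evaluate(self, x):
        pts = self.points
        if x <= pts[0][0]:
            return pts[0][1]
        if x >= pts[-1][0]:
            return pts[-1][1]
        i = bisect_right(self.xs, x)  # index of the first point strictly right of x
        (x0, y0), (x1, y1) = pts[i - 1], pts[i]
        return y0 + (x - x0) / (x1 - x0) * (y1 - y0)


# One game parameter ("life", assumed to range 0..100) drives three properties,
# each through its own curve. All values below are illustrative assumptions.
DRUMS_LPF_CUTOFF_HZ = RtpcCurve([(0, 20000.0), (100, 500.0)])  # more filtering as life rises
RIDE_VOLUME_DB = RtpcCurve([(0, 0.0), (100, -24.0)])           # louder as life falls
CELLO_VOLUME_DB = RtpcCurve([(0, 0.0), (30, 0.0), (31, -96.0), (100, -96.0)])  # audible below ~30


def music_properties(life):
    """Evaluate every curve bound to the hypothetical "life" game parameter."""
    return {
        "drums_lpf_cutoff_hz": DRUMS_LPF_CUTOFF_HZ.evaluate(life),
        "ride_volume_db": RIDE_VOLUME_DB.evaluate(life),
        "cello_volume_db": CELLO_VOLUME_DB.evaluate(life),
    }


for life in (100, 50, 10):
    print(life, music_properties(life))
```

Keeping one curve per bound property, rather than one curve per game parameter, is what lets the same "life" value push the drum filter and the ride cymbal in opposite directions while the cello behaves like a threshold.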

Segments are interactive with regard to continuous changes (RTPCs), but not yet with regard to discrete changes (States). As mentioned earlier, properties may only change according to musical rules. This involves three things:

1) Segments need to be aware of the music timing (meter): tempo and time signature.

2) With certain songs, it might be necessary to identify stricter locations where property changes are musically correct. Therefore, users should be able to mark strategic locations where property changes will be allowed to occur.

3) Objects that are registered to a State Group need to specify when the property change will actually take effect, hence the concept of a "delayed state change". Choices include Immediate, Next Beat, Next Bar, Next Cue, and so on.
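As a rough illustration of these three points, the sketch below computes when a delayed state change would take effect, given a tempo, a number of beats per bar, and a list of user-placed cue positions. It assumes a constant tempo expressed in the bar's beat unit, times in seconds from the start of the segment, and that a change requested exactly on a boundary takes effect at that boundary. `SyncPoint`, `Meter` and `effective_time` are hypothetical names, not part of Wwise.

```python
import math
from bisect import bisect_left
from dataclasses import dataclass, field
from enum import Enum


class SyncPoint(Enum):
    """Hypothetical subset of the delayed state change choices."""
    IMMEDIATE = "immediate"
    NEXT_BEAT = "next_beat"
    NEXT_BAR = "next_bar"
    NEXT_CUE = "next_cue"


@dataclass
class Meter:
    tempo_bpm: float
    beats_per_bar: int
    cues_s: list = field(default_factory=list)  # user-placed cue positions, in seconds

    @property
    def beat_s(self):
        return 60.0 / self.tempo_bpm

    @property
    def bar_s(self):
        return self.beat_s * self.beats_per_bar


def effective_time(request_s, sync, meter):
    """Time at which a state change requested at request_s is applied (None if no cue remains)."""
    if sync is SyncPoint.IMMEDIATE:
        return request_s
    if sync is SyncPoint.NEXT_BEAT:
        return math.ceil(request_s / meter.beat_s) * meter.beat_s
    if sync is SyncPoint.NEXT_BAR:
        return math.ceil(request_s / meter.bar_s) * meter.bar_s
    if sync is SyncPoint.NEXT_CUE:
        cues = sorted(meter.cues_s)
        i = bisect_left(cues, request_s)  # first cue at or after the request
        return cues[i] if i < len(cues) else None
    raise ValueError(f"unsupported sync point: {sync}")


meter = Meter(tempo_bpm=120, beats_per_bar=4, cues_s=[1.0, 3.75, 6.0])
for sync in SyncPoint:
    print(sync.name, effective_time(2.3, sync, meter))
```

At 120 BPM in 4/4, a state change requested at 2.3 s would be applied at 2.5 s with Next Beat, 4.0 s with Next Bar, and 3.75 s with Next Cue. What an engine should do when no cue remains is not covered by the text; returning `None` here is just a placeholder.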

Instrument-Level Variation

Although one Segment can constitute a whole song, it would be convenient to split the song into several parts (segments) and play them back to back, possibly looping one part a couple of times. We created a container in which we can prepare a playlist of different segments, specifying which segments play, in what order, and how many times each of them loops. This container is called the Music Playlist.

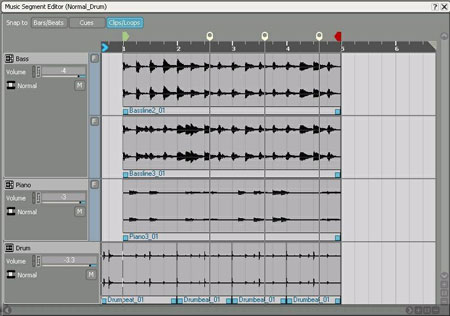
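At its simplest, a Music Playlist as described above is an ordered list of (segment, loop count) entries. The sketch below expands such a list into the sequence of segments that would be heard back to back. `PlaylistItem`, `play_order` and the segment names are hypothetical, and the sketch only models the order and loop counts mentioned in the text.

```python
from dataclasses import dataclass


@dataclass
class PlaylistItem:
    segment: str    # name of a segment (hypothetical identifier)
    loops: int = 1  # how many times the segment plays in a row

    def __post_init__(self):
        if self.loops < 1:
            raise ValueError("loops must be at least 1")


def play_order(items):
    """Expand a playlist into the sequence of segments played back to back."""
    order = []
    for item in items:
        order.extend([item.segment] * item.loops)
    return order


playlist = [PlaylistItem("intro"), PlaylistItem("verse", loops=2), PlaylistItem("chorus")]
print(play_order(playlist))  # ['intro', 'verse', 'verse', 'chorus']
```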

Furthermore, some parts may be very similar, differing only in a few tracks or instruments that vary slightly. This is known as "instrument-level variation" (2). Instrument-level variation is a way to generate more music with less audio content. To support it, we came up with Subtracks: different pieces of audio content within a single track object. Every time a segment is played, one subtrack is selected for each track and played back. However, all subtracks share the properties of their track. The screenshot below illustrates this.

For example, you can add some variation to the drum track while keeping the same bass line. To do this, put the segment in a looping playlist, and create as many subtracks in the drum track as there are drum variations. Every time the segment is played, one of the drum variations is selected.
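The drum example can be sketched as follows. Each track holds a list of subtracks plus one set of properties shared by all of them (here only a volume), and each time the segment plays, one subtrack is chosen per track. The uniform random choice, the class names and the clip names are assumptions made for illustration; the text does not say how the subtrack is selected.

```python
import random
from dataclasses import dataclass


@dataclass
class MusicTrack:
    name: str
    subtracks: list         # alternative audio clips (hypothetical clip names)
    volume_db: float = 0.0  # track property, shared by every subtrack


@dataclass
class MusicSegment:
    name: str
    tracks: list


def choose_subtracks(segment, rng):
    """Pick one subtrack for each track of the segment (uniformly at random, by assumption)."""
    return {track.name: rng.choice(track.subtracks) for track in segment.tracks}


drums = MusicTrack("drums", ["drums_a", "drums_b", "drums_c"], volume_db=-3.0)
bass = MusicTrack("bass", ["bass_line"])  # a single subtrack: the bass line never changes
groove = MusicSegment("groove", [drums, bass])

rng = random.Random(42)  # seeded so runs are reproducible
for loop in range(4):    # the segment looping inside a playlist
    picks = choose_subtracks(groove, rng)
    print(loop, picks["drums"], picks["bass"], drums.volume_db)
```

Because `volume_db` lives on the track rather than on each clip, whichever drum subtrack is picked plays at the same level, which mirrors the point that subtracks share their track's properties.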

Dovetailing

Playing segments back to back is not as simple as it seems. If you render a song from your favorite sequencer, cut it into parts, and play them one after the other sample-accurately, it will sound fine. But if you jump from one part to another in arbitrary order, the notes that were playing at the time of the cut might stop abruptly, and/or the reverberation tail of the outgoing audio may not match that of the new content. To connect segments successfully, a portion of audio should overlap across both segments, like dovetails.

If our tracks actually contained MIDI events, the synthesizer would play the release phase of the envelopes of the notes that were sounding at the time of the cut while it started playing the MIDI events of the new segment. Dovetailing would occur naturally. This is not the case with pre-mixed audio, so we must provide a solution for it.

We decided that a segment would have a region at its end that overlaps the next segment. The logical end of the segment is marked by the Exit Cue, and the region that follows it is known as the post-exit. Likewise, the logical beginning is marked by the Entry Cue, which is preceded by the pre-entry. A segment's exit cue is synchronized with the next segment's entry cue. The figure below illustrates this.

Dovetailing is fundamental to the connecting approach.
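The alignment rule described above (each segment's exit cue synchronized with the next segment's entry cue) is enough to place segments on a timeline. In the sketch below, each segment's audio file is described by the position of its entry cue, the position of its exit cue and its total length, all in seconds from the start of the file, so the pre-entry is everything before the entry cue and the post-exit everything after the exit cue. `SegmentTiming`, `Placement` and `schedule` are hypothetical names, and starting the first file at the beginning of the timeline is an assumption.

```python
from dataclasses import dataclass
from typing import NamedTuple


@dataclass
class SegmentTiming:
    name: str
    entry_cue_s: float  # end of the pre-entry region, from the start of the file
    exit_cue_s: float   # start of the post-exit region, from the start of the file
    length_s: float     # total file length, pre-entry and post-exit included


class Placement(NamedTuple):
    name: str
    file_start: float  # when the file (including its pre-entry) starts playing
    entry_time: float  # global time of the entry cue
    exit_time: float   # global time of the exit cue
    file_end: float    # when the post-exit has finished playing


def schedule(segments, start_s=0.0):
    """Place segments so that each exit cue coincides with the next entry cue."""
    placements = []
    if not segments:
        return placements
    entry = start_s + segments[0].entry_cue_s  # assumption: first file starts at start_s
    for seg in segments:
        file_start = entry - seg.entry_cue_s   # the pre-entry plays before the entry cue
        exit_time = file_start + seg.exit_cue_s
        placements.append(
            Placement(seg.name, file_start, entry, exit_time, file_start + seg.length_s)
        )
        entry = exit_time  # next segment's entry cue is synchronized with this exit cue
    return placements


a = SegmentTiming("A", entry_cue_s=0.0, exit_cue_s=8.0, length_s=10.0)
b = SegmentTiming("B", entry_cue_s=0.5, exit_cue_s=8.5, length_s=11.0)
for p in schedule([a, b]):
    print(p)
```

With these example values, B's file starts at 7.5 s so that its entry cue lands on A's exit cue at 8.0 s; B's pre-entry (7.5 s to 8.0 s) overlaps the end of A's body, and A's post-exit (8.0 s to 10.0 s) keeps ringing under the start of B. That overlap is the dovetail.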